Simplify Model APIs with OpenRouter

If you’ve been exploring AI tools recently, you’ve probably come across OpenRouter (openrouter.ai). It’s a powerful platform that simplifies how developers and creators access a wide range of AI models, and it pairs perfectly with Msty.

In this post, we’ll walk you through what OpenRouter is, why it’s an excellent choice for managing multiple models through a single endpoint, and how to connect it to Msty. We’ll also touch on some of the unique benefits (and trade-offs) of using OpenRouter-hosted models.

What is OpenRouter?

OpenRouter is like an API cafeteria for large language models. Instead of signing up with OpenAI, Anthropic, Mistral, and other providers and managing a separate API key for each, you route all your requests through OpenRouter using a single API key.

This makes it easy to switch between LLM providers and try new ones, whether you’re using GPT-4, Claude, Mistral, or open-weight models like Qwen or DeepSeek.
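Under the hood, "one API key" means one HTTP endpoint where only the model name changes. The minimal sketch below assumes OpenRouter's OpenAI-compatible chat completions endpoint at `https://openrouter.ai/api/v1/chat/completions` with Bearer-token authentication; the model IDs are examples, so check OpenRouter's model list for current names. Msty handles all of this for you, and the sketch only builds the requests (using Python's standard library) without sending them.

```python
import json
import urllib.request

# Assumed: OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for one OpenRouter model."""
    body = {
        "model": model,  # a "vendor/model" ID, e.g. "openai/gpt-4o" (example only)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the same key for every model
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first reply's text (needs network and a valid key)."""
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    # OpenAI-style response shape: choices[0].message.content
    return data["choices"][0]["message"]["content"]


# Switching providers only means changing the model string; URL and key stay the same.
gpt = build_request("YOUR_OPENROUTER_KEY", "openai/gpt-4o", "Hello!")
claude = build_request("YOUR_OPENROUTER_KEY", "anthropic/claude-3.5-sonnet", "Hello!")
```

Calling `send(gpt)` or `send(claude)` with a real key would return each model's reply; nothing else in the request differs between the two.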

How to Use OpenRouter with Msty.ai

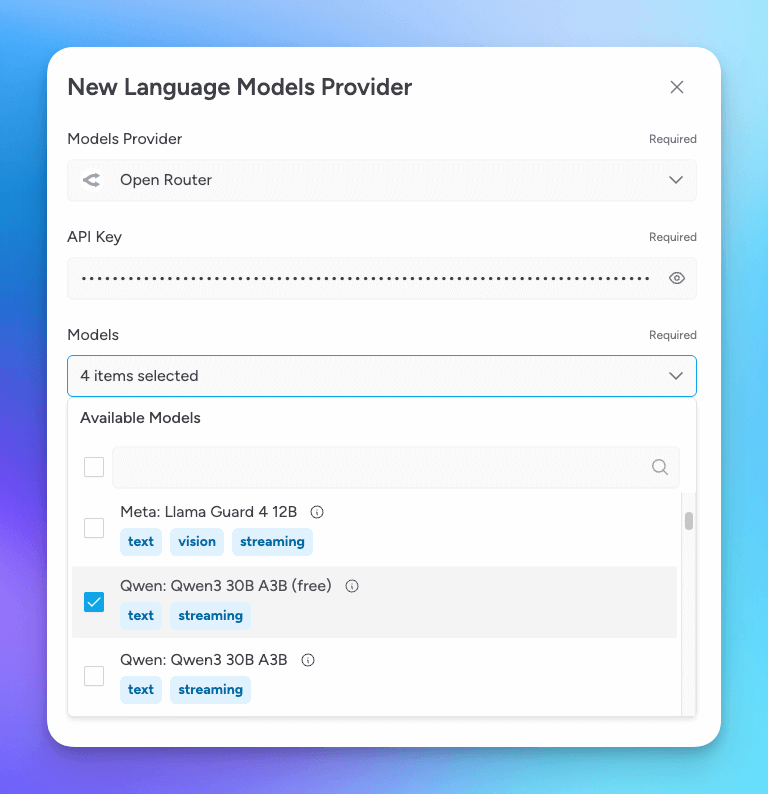

Msty lets you bring your own API key, and that includes OpenRouter keys. Here’s how to connect the two:

- Sign up at openrouter.ai and create an API key.

- In Msty, open Model Hub and add OpenRouter as a new provider.

- Paste your OpenRouter key into the API key field.

- Select the models you want to use in Msty.
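The last step, choosing models, amounts to picking IDs from OpenRouter's catalog. As a sketch, assuming the `GET https://openrouter.ai/api/v1/models` endpoint returns a `data` array whose entries carry an `id` field (verify against OpenRouter's API reference), you could list model IDs and filter them by vendor prefix. `pick_models` is a hypothetical helper for illustration; Msty's Model Hub does this selection for you in its UI.

```python
import json
import urllib.request

# Assumed: OpenRouter's model listing endpoint, returning {"data": [{"id": ...}, ...]}.
MODELS_URL = "https://openrouter.ai/api/v1/models"


def fetch_model_ids(api_key: str) -> list[str]:
    """Return every model ID OpenRouter lists (needs network access)."""
    request = urllib.request.Request(
        MODELS_URL, headers={"Authorization": f"Bearer {api_key}"}
    )
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return [model["id"] for model in payload["data"]]


def pick_models(model_ids: list[str], vendors: set[str]) -> list[str]:
    """Hypothetical helper: keep IDs whose vendor prefix (before "/") is in `vendors`."""
    return [mid for mid in model_ids if mid.split("/", 1)[0] in vendors]


# Illustrative IDs, not a live listing.
sample_ids = [
    "openai/gpt-4o",
    "qwen/qwen-2.5-72b-instruct",
    "deepseek/deepseek-chat",
    "mistralai/mistral-7b-instruct",
]
selected = pick_models(sample_ids, {"qwen", "deepseek"})
```

Here `selected` keeps only the Qwen and DeepSeek entries, in their original order.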

That’s it. Msty will now route your requests through OpenRouter, unlocking access to dozens of models instantly.

Local-ish Models, Hosted for You

OpenRouter also gives you access to open-weight models that people often run locally, like Qwen or DeepSeek, served from the cloud instead. OpenRouter routes these requests to inference providers that host the models, so you can use them without installing or managing anything yourself. And because they run on server hardware rather than your own machine, you may get faster responses than you would running them locally.

However, keep in mind: these models are still hosted on third-party infrastructure. Even though they aren’t proprietary models from the big AI labs, your prompts pass through OpenRouter and the provider serving the model. It’s worth weighing the privacy implications if you’re working with sensitive information.
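One way to act on that trade-off is to decide per request where a prompt goes. The sketch below is a hypothetical policy, not a Msty or OpenRouter feature: prompts flagged as sensitive go to a model on your own machine, and everything else goes to OpenRouter. It assumes the local server exposes an OpenAI-compatible API (the localhost URL and both model tags are placeholders), so only the base URL and model ID differ between the two destinations.

```python
from dataclasses import dataclass

# Hosted: OpenRouter's OpenAI-compatible base URL.
OPENROUTER_BASE = "https://openrouter.ai/api/v1"
# Hypothetical: a local OpenAI-compatible server running on your own machine.
LOCAL_BASE = "http://localhost:11434/v1"


@dataclass(frozen=True)
class Target:
    base_url: str
    model: str

    @property
    def chat_url(self) -> str:
        # Both servers are assumed to expose the same chat completions path.
        return f"{self.base_url}/chat/completions"


def choose_target(sensitive: bool) -> Target:
    """Keep sensitive prompts on your machine; send everything else to OpenRouter."""
    if sensitive:
        return Target(LOCAL_BASE, "qwen2.5:7b")  # hypothetical local model tag
    return Target(OPENROUTER_BASE, "qwen/qwen-2.5-72b-instruct")  # example hosted ID
```

The design choice is simply that privacy is decided before the request is built, so sensitive text never reaches third-party infrastructure in the first place.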

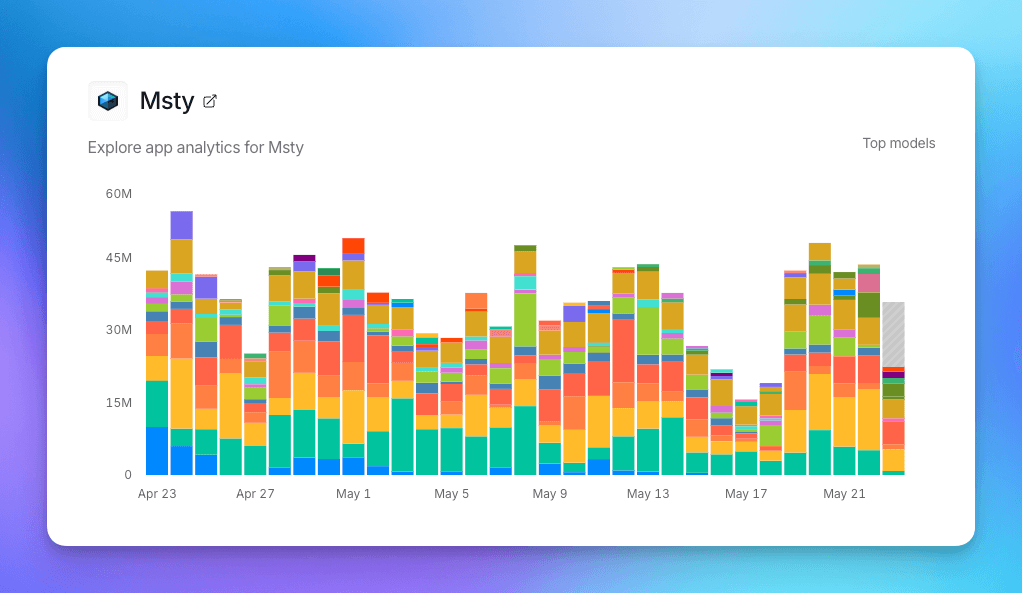

See What’s Trending: Msty x OpenRouter Model Leaderboard

If you’re exploring which models to use with Msty, OpenRouter offers a helpful resource: a live leaderboard of the models most used by Msty users who have connected their OpenRouter API keys.

This leaderboard provides valuable insight into which models are currently popular within that segment of the Msty community, from cutting-edge options like Claude and GPT-4 to high-efficiency alternatives like Mistral and Qwen.

Whether you're looking to follow trends, test high-performing models, or simply see what others are using, the leaderboard is a great way to stay informed and make more confident decisions when configuring your Msty workspace.
